Identifying Privacy Vulnerabilities in Key Stages of Computer Vision, Natural Language Processing, and Voice Processing Systems

Keywords:

Artificial Intelligence, Computer Vision, Data Collection, Natural Language Processing, Privacy Concerns, Privacy Risks, Voice Processing

Abstract

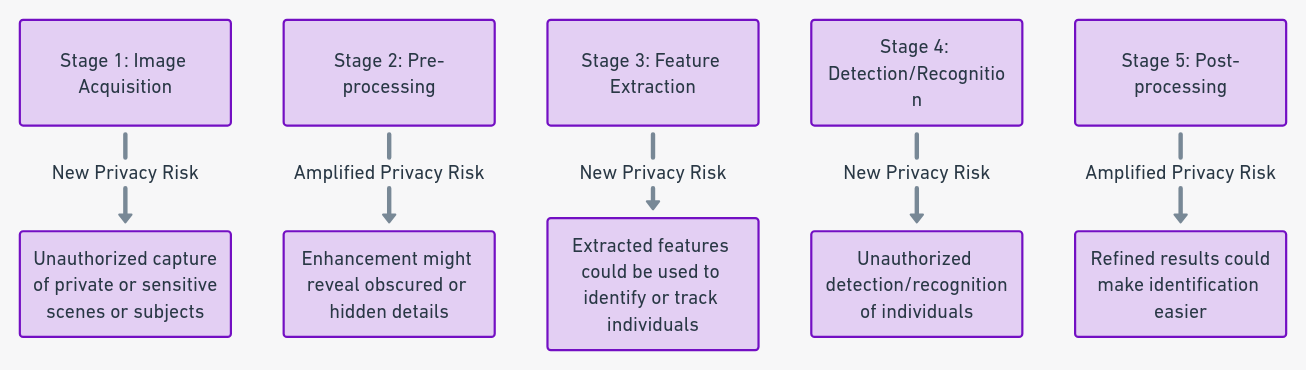

Many Artificial Intelligence (AI) algorithms depend on extensive datasets, often containing personal information, to function effectively. This dependence raises immediate concerns about potential infringements on individual privacy. This research analyzes the privacy concerns and risks associated with three major subdomains of AI: Computer Vision, Natural Language Processing (NLP), and Voice Processing Systems. Each subdomain is broken down into multiple stages to scrutinize the privacy vulnerabilities inherent in each. In Computer Vision, risks range from unauthorized image acquisition to the potential misuse of visual data when it is integrated with larger platforms. Particular attention is paid to the feature extraction and object detection stages, which can enable unauthorized profiling or tracking. In the NLP workflow, unauthorized data collection and the risk of data leakage through feature extraction are highlighted. The potential for adversarial attacks during the deployment stage and the risks associated with post-deployment monitoring are also examined. Finally, in Voice Processing Systems, the risks tied to unauthorized data collection and the potential identification of individuals through data preprocessing are discussed. Concerns related to human annotators during data annotation and the unintended memorization of specific voice inputs during model training are also explored. Each stage is analyzed in terms of whether it introduces a new type of privacy risk or amplifies existing risks. The objective is to provide a structured framework that comprehensively categorizes privacy risks in these AI subdomains, thereby facilitating future research and the development of more secure, privacy-preserving AI technologies.

License

Copyright (c) 2021 International Journal of Business Intelligence and Big Data Analytics

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.